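Goal: batch-convert Word documents to HTML with one click.

Advantages: it can be embedded in a project and run from a command; it can be run locally by double-clicking; and it is customizable.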

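Approach:

- Use pywin32 to drive the locally installed Word application and save each .docx document as a filtered web page (.htm, .html).

- Use the html.parser module to parse the exported HTML, use regular-expression substitutions from the re module to strip redundant content, and use the bs4 module for further cleanup of the HTML.

- Use the codecs module to save the cleaned-up HTML.

- Use PyInstaller (or cx_Freeze; in my experience the executable built by PyInstaller is more stable) to package the script as the word2html.exe executable.

- Put the packaged output in the project directory and add a start word2html.exe entry to the scripts section of the project's package.json, so the program can be run from within the project.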
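
The regex cleanup step in the list above can be tried without Word installed. The following is only a minimal sketch: the helper name `strip_word_markup` and the sample string are assumptions made for illustration (the sample stands in for Word's exported HTML), and it uses nothing beyond the standard-library `re` module.

```python
import re


def strip_word_markup(html):
    """Hypothetical helper: remove a few kinds of Word-specific markup."""
    html = re.sub(r'\s+', ' ', html)                          # collapse whitespace runs
    html = re.sub(r'>\s+<', '><', html)                       # drop whitespace between tags
    html = re.sub(r'<o:p\b[^>]*>.*?</o:p>', '', html)         # drop <o:p>...</o:p>
    html = re.sub(r'<font\b[^>]*>(.*?)</font>', r'\1', html)  # unwrap <font>, keep its text
    return html.strip()


# Hand-written sample standing in for Word's output (an assumption).
sample = '<p>\n  <font face="SimSun">Hello</font> <o:p></o:p>\n</p>'
print(strip_word_markup(sample))  # prints <p>Hello</p>
```

Collapsing whitespace first puts the whole document on one line, which is why the later patterns can rely on `.` without the `re.DOTALL` flag.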

Preparation:

0. Learn Python

Python documentation

Liao Xuefeng's Python tutorial

1. Set up the Python environment

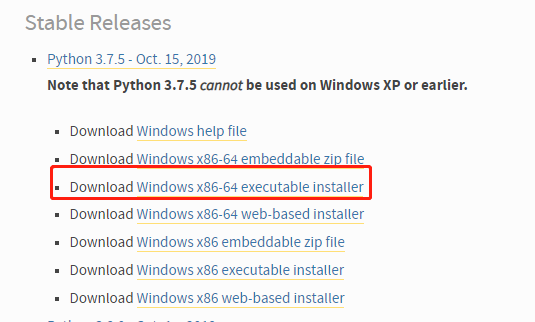

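On Windows, go to the official website and download the Python build that matches your machine's architecture (32-bit or 64-bit). As shown below, I downloaded 64-bit Python 3.7.5.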

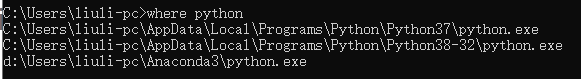

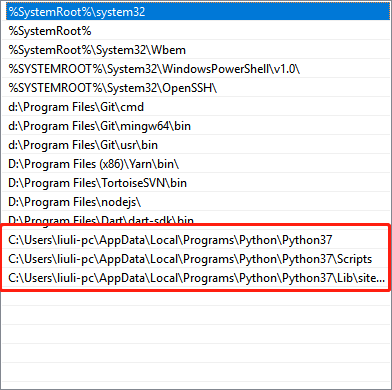

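On the command line, run where python to find the Python installation directory, then configure the environment variables.

2. Download PyCharm to make writing Python programs easier

3. Download and install the third-party modules (see the Python Packaging User Guide)

pywin32

Note: download the build that matches both your Python version and its bitness. I downloaded the 64-bit build for Python 3.7.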
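
If you are unsure which pywin32 build to pick, the interpreter can report its own version and bitness. This is a small sketch using only the standard library; the variable name `bits` is just for illustration.

```python
import struct
import sys

# Size of a C pointer in bits: 32 on a 32-bit interpreter, 64 on a 64-bit one.
bits = struct.calcsize('P') * 8

print('Python %d.%d, %d-bit' % (sys.version_info[0], sys.version_info[1], bits))
print('Interpreter path:', sys.executable)
```

What matters here is the bitness of the Python interpreter, which can differ from that of the operating system (a 32-bit Python runs fine on 64-bit Windows).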

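Python regular expressions: the re module is part of the Python standard library, so no pip install is needed

Beautiful Soup (the first version below also uses the html5lib parser, which is installed separately)

cchardet (note that the code below imports chardet)

4. Install the packaging tool cx-Freeze: pip install cx-Freeze[==version]

cx_Freeze installation reference

5. Code implementation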

word2html

```python
# -*- coding: utf-8 -*-
import codecs
import os
import re

from bs4 import BeautifulSoup, Comment
from win32com import client as wc


def init():
    path = os.getcwd()  # directory that holds the .docx files
    files = os.listdir(path)  # names of all entries in that directory
    html_dir = os.path.join(path, 'html')
    try:
        os.mkdir(html_dir)
    except FileExistsError as e:
        print(e)
    for file in files:  # walk the directory
        # splitext copes with names that have no dot or several dots
        if os.path.isfile(file) and os.path.splitext(file)[1].lower() == '.docx':
            main(path, html_dir, file)


def main(word_dir, html_dir, word_name):
    html_name = os.path.splitext(word_name)[0] + '.html'
    html = word_to_html(os.path.join(word_dir, word_name), os.path.join(html_dir, html_name))
    res = format_html(html)
    html = my_beautiful_soup(res)
    html = re.sub(r'<strong>\s*</strong>', '', html)  # empty <strong> tags
    html = re.sub(r' {2,}', ' ', html)  # squeeze runs of spaces
    html = re.sub(r'\s+', ' ', html)  # collapse any whitespace run into one space
    save_file(html, os.path.join(html_dir, html_name))


# doc_path - path of the Word document; export_path - path of the exported HTML
def word_to_html(doc_path, export_path):
    word = None
    doc = None
    try:
        word = wc.Dispatch('Word.Application')
        doc = word.Documents.Open(doc_path)
        doc.SaveAs(export_path, 10)  # 10 = wdFormatFilteredHTML
    finally:
        if doc is not None:
            doc.Close()
        if word is not None:
            word.Quit()
    # Read with the platform default encoding, as in the original script
    with open(export_path, 'r') as f:
        return f.read()


# Format the HTML
# res - the exported HTML
def format_html(res):
    res = re.sub(r'<html.*?>', r'<!DOCTYPE html>', res)
    res = re.sub(r'\s+', ' ', res)  # collapse whitespace runs into one space
    res = re.sub(r'\s+>', '>', res)  # whitespace before the end of a tag
    res = re.sub(r'>\s+<', '><', res)  # whitespace between tags
    res = re.sub(r'<font.*?>(.*?)</font>', r'\1', res)  # unwrap <font></font>
    res = re.sub(r'<ins.*?</ins>', '', res)  # remove <ins></ins>
    res = re.sub(r'<u>(.*?)</u>', r'\1', res)  # unwrap <u></u>
    # remove <o:p></o:p>; the original pattern '<o(.*?)</o.*?>' also hit <ol>
    res = re.sub(r'<o:p\b.*?</o:p>', '', res)
    res = re.sub(r'<b>(.*?)</b>', r'<strong>\1</strong>', res)  # <b> becomes <strong>
    return res


def my_beautiful_soup(html):
    soup = BeautifulSoup(html, "html5lib")
    head_tag = soup.new_tag('head')
    meta1 = soup.new_tag('meta', attrs={'name': 'viewport', 'content': 'width=device-width,initial-scale=1,maximum-scale=1,minimum-scale=1,user-scalable=no'})
    meta2 = soup.new_tag('meta', attrs={'content': 'text/html', 'charset': 'utf-8', 'http-equiv': 'Content-Type'})
    title = soup.new_tag('title')
    head_tag.append(meta1)
    head_tag.append(meta2)
    head_tag.append(title)
    soup.head.replace_with(head_tag)

    # Walk every element
    for tag in soup.find_all(True):
        del tag['class']  # drop class
        if tag.get('style') is not None:
            tag_style = tag['style']
            del tag['style']  # drop the inline style
            # Add bold styling (disabled)
            # if ('font-weight:bold' in tag_style) and (tag.string is not None):
            #     strong_tag = soup.new_tag("strong")
            #     if 'text-decoration:underline' in tag_style:
            #         strong_tag['style'] = 'text-decoration:underline;'
            #     strong_tag.string = tag.string
            #     tag.replace_with(strong_tag)
            # Re-add underline styling
            if 'text-decoration:underline' in tag_style and tag.string is not None:
                u_tag = soup.new_tag("u")
                u_tag['style'] = 'text-decoration:underline;'
                u_tag.string = tag.string
                tag.replace_with(u_tag)
            # Re-add centering
            if 'text-align:center' in tag_style:
                tag['style'] = 'text-align:center;'
            # Re-add right alignment
            elif 'text-align:right' in tag_style:
                tag['style'] = 'text-align:right;'

    # Remove <span> tags, keeping their content
    for span in soup.find_all('span'):
        span.unwrap()
    # Remove <i> tags, keeping their content
    for i_tag in soup.find_all('i'):
        i_tag.unwrap()

    # Merge adjacent tags with the same name and style
    for tag in soup.find_all(True):
        prev = tag.previous_sibling
        if (prev is not None and prev.string is not None
                and prev.name == tag.name
                and prev.get('style') == tag.get('style')
                and tag.string is not None):
            tag.string.insert_before(prev.string)
            prev.decompose()

    # Remove comments
    for element in soup(string=lambda text: isinstance(text, Comment)):
        element.extract()
    return str(soup)


# Save the formatted file
def save_file(res, html_path):
    with codecs.open(html_path, 'w+', 'utf-8') as out:
        out.write(res)


if __name__ == '__main__':
    init()
```
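
`word_to_html` reads the exported file with `open(export_path, 'r')`, which depends on the platform's default encoding. If the export is ever read on a machine with a different default, one option is to read the bytes and try a short list of encodings. This is a hedged sketch only: the helper name `read_text_with_fallback` and the candidate list `('utf-8', 'gbk')` are assumptions, since the post does not say which encoding Word actually writes.

```python
import os
import tempfile


def read_text_with_fallback(path, encodings=('utf-8', 'gbk')):
    """Hypothetical helper: decode a file with the first encoding that works."""
    with open(path, 'rb') as f:
        raw = f.read()
    for enc in encodings:
        try:
            return raw.decode(enc)
        except UnicodeDecodeError:
            continue
    # Nothing matched: decode with the first candidate, replacing bad bytes.
    return raw.decode(encodings[0], errors='replace')


# Demo: a GBK-encoded file is not valid UTF-8, so the second candidate is used.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, 'demo.html')
    with open(demo_path, 'wb') as f:
        f.write('<p>中文</p>'.encode('gbk'))
    text = read_text_with_fallback(demo_path)

print(text)
```

The order of the candidates matters: UTF-8 is tried first because it rejects most byte sequences that are not UTF-8, whereas GBK accepts many byte sequences and would silently produce wrong text.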

Another version:

```python
# -*- coding: utf-8 -*-
import codecs
import os
import re
from html.parser import HTMLParser

import chardet
from bs4 import BeautifulSoup
from win32com import client as wc

globalClsList = []  # distinct inline style strings; the index becomes the class number


def init():
    path = os.getcwd()  # directory that holds the .docx files
    files = os.listdir(path)  # names of all entries in that directory
    html_dir = os.path.join(path, 'html')
    try:
        os.mkdir(html_dir)
    except FileExistsError as e:
        print(e)
    for file in files:  # walk the directory
        # splitext copes with names that have no dot or several dots
        if os.path.isfile(file) and os.path.splitext(file)[1].lower() == '.docx':
            main(path, html_dir, file)


def main(word_dir, html_dir, word_name):
    base_name = os.path.splitext(word_name)[0]
    html_name = base_name + '.html'
    html_name1 = base_name + '1.html'
    globalClsList.clear()  # do not carry styles over from the previous document
    html = word_to_html(os.path.join(word_dir, word_name), os.path.join(html_dir, html_name))
    res = format_html(html)
    parser = MyHTMLParser()
    parser.feed(res)
    res = str(my_beautiful_soup(res))
    cls_list_text = ''
    for idx, cls in enumerate(globalClsList):
        cls_list_text += '.cls%s{%s}' % (idx, cls)
    cls_list_text = '<style>' + cls_list_text + '</style></head>'
    # A function replacement keeps backslashes in the CSS from being read as group references
    res = re.sub(r'</head>', lambda m: cls_list_text, res)
    save_file(res, os.path.join(html_dir, html_name1))


# doc_path - path of the Word document; export_path - path of the exported HTML
def word_to_html(doc_path, export_path):
    word = None
    doc = None
    try:
        word = wc.Dispatch('Word.Application')
        doc = word.Documents.Open(doc_path)
        doc.SaveAs(export_path, 10)  # 10 = wdFormatFilteredHTML
    finally:
        if doc is not None:
            doc.Close()
        if word is not None:
            word.Quit()
    with open(export_path, 'r') as f:
        return f.read()


def format_html(res):
    return res


# Format the HTML (fuller variant, not called by main)
# res - the exported HTML
def format_html1(res):
    res = re.sub(r'charset=[\w\-]+', 'charset=utf-8', res)
    # chardet may return None, so fall back to utf-8
    code_type = chardet.detect(res.encode('utf-8'))['encoding'] or 'utf-8'
    try:
        res = res.encode('utf-8').decode(code_type)
    except UnicodeDecodeError:
        pass  # res is already a str; str has no .decode() in Python 3
    head = r'''<head>
<meta name="viewport" content="width=device-width,initial-scale=1,maximum-scale=1,minimum-scale=1,user-scalable=no">
<meta content="text/html; charset=utf-8" http-equiv="Content-Type"/>
</head>
'''
    # Remove the generated <style> block (non-greedy, so only up to the first </style>)
    res = re.sub(r'<style>[\s\S]*?</style>', '', res)
    # Remove the generated <xml> block
    res = re.sub(r'<xml>[\s\S]*?</xml>', '', res)
    res = re.sub(re.escape(code_type), 'utf-8', res)  # change the encoding name
    # Replace the head with the meta tags; DOTALL because the head spans several lines
    res = re.sub(r'<head>.*?</head>', head, res, flags=re.DOTALL)
    # Strip inline styles (disabled)
    # res = re.sub(u'style=[\w\d;\-_.\.\s;,:#\"\'%\u4e00-\u9fa5]+>', '>', res)
    # Whitespace cleanup
    # res = re.sub(r' ', r'', res)  # remove spaces (disabled)
    res = re.sub(r'\s+', ' ', res)  # collapse whitespace runs into one space
    res = re.sub(r'\s+>', '>', res)  # whitespace before the end of a tag
    res = re.sub(r'<span>\s*</span>', '', res)  # empty <span> tags
    res = re.sub(r'>\s+<', '><', res)  # whitespace between tags
    res = re.sub(r'<font.*?>(.*?)</font>', r'\1', res)  # unwrap <font></font>
    res = re.sub(r'<b>(.*?)</b>', r'\1', res)  # unwrap <b></b>
    res = re.sub(r'<u>(.*?)</u>', r'\1', res)  # unwrap <u></u>
    # remove <o:p></o:p>; the original pattern '<o(.*?)</o.*?>' also hit <ol>
    res = re.sub(r'<o:p\b.*?</o:p>', '', res)
    res = re.sub(r'<html.*?>(.*?)</html>', r'<!DOCTYPE html>\1</html>', res)
    res = re.sub(r'mso-.*?;', '', res)  # remove properties starting with mso-
    res = re.sub(r'class=".*?"', '', res)  # remove the generated class attributes
    res = re.sub('font-family:([\u4e00-\u9fa5]*?);', '', res)  # remove font-family with a Chinese font name
    res = re.sub(r'(:\d*\.\d)\d*(pt;)', r'\1\2', res)  # keep one decimal place in pt values
    return res


class MyHTMLParser(HTMLParser):
    def handle_starttag(self, tag, attrs):
        # Record every distinct inline style value
        for name, value in attrs:
            if name == 'style' and value not in globalClsList:
                globalClsList.append(value)

    def handle_endtag(self, tag):
        pass

    def handle_data(self, data):
        pass


# Replace inline styles with classes and merge adjacent identical tags
def my_beautiful_soup(html):
    soup = BeautifulSoup(html, "html.parser")
    for tag in soup.find_all(style=True):
        idx = globalClsList.index(tag['style'])
        tag['class'] = 'cls' + str(idx)
        del tag['style']
        prev = tag.previous_sibling
        # .get() avoids a KeyError when the previous sibling has no class
        if prev is not None and prev.name == tag.name and prev.get('class') == tag['class']:
            if tag.string is not None and prev.string is not None:
                tag.string = prev.string + tag.string
                prev.decompose()
    return soup


# Save the formatted file
def save_file(res, html_path):
    with codecs.open(html_path, 'w+', 'utf-8') as out:
        out.write(res)


if __name__ == '__main__':
    init()
```
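
The idea behind this second version is to collect each distinct inline `style` value once, number it, and emit one CSS rule per value, so that repeated inline styles become short class names. The sketch below isolates that step using only the standard-library `html.parser`; the names `StyleCollector` and `build_css` and the sample markup are assumptions for illustration, not part of the script above.

```python
from html.parser import HTMLParser


class StyleCollector(HTMLParser):
    """Hypothetical collector: remembers each distinct inline style once."""

    def __init__(self):
        super().__init__()
        self.styles = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == 'style' and value and value not in self.styles:
                self.styles.append(value)


def build_css(styles):
    """Emit one rule per style; the list index becomes the class number."""
    return ''.join('.cls%d{%s}' % (i, s) for i, s in enumerate(styles))


collector = StyleCollector()
collector.feed('<p style="color:red">a</p>'
               '<p style="color:red">b</p>'
               '<span style="font-size:12pt">c</span>')
css = build_css(collector.styles)
print(css)  # prints .cls0{color:red}.cls1{font-size:12pt}
```

Keeping the list on the parser instance rather than in a module-level variable means each document starts with an empty list, which avoids styles from one file leaking into the next.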

6. Miscellaneous

Creating docx files with Python

A word2html implemented by someone else in JS

python-docx

mammoth.js

```python
#! /usr/bin/env python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup

html = '''
<script>a</script>
baba
<script>b</script>
<h1>hi, world</h1>
'''

soup = BeautifulSoup('<script>a</script>baba<script>b</script><h1>', 'html.parser')
# Remove every <script> tag together with its content
for s in soup('script'):
    s.extract()
# Or: iterate over soup.find_all('script')
print(soup)
# Output
# baba<h1></h1>
```

```python
#! /usr/bin/env python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup, Comment

data = """<div class="foo">
cat dog sheep goat
<!--
<p>test</p>
-->
</div>"""

soup = BeautifulSoup(data, 'html.parser')
# Remove every HTML comment node
for element in soup(string=lambda text: isinstance(text, Comment)):
    element.extract()
print(soup.prettify())
# Output:
# <div class="foo">
# cat dog sheep goat
# </div>
```